Rethinking Copyright in the Age of Generative AI

Striking a balance between incentives and innovation

An urgent call for intentional design and transparent governance

Humanity is giving birth to something new: an emergent intelligence that is about to affect society in profound ways.

New technologies like artificial intelligence and machine learning hold tremendous potential to accelerate solutions to humanity’s greatest challenges – from combating climate change to reducing poverty. However, whether AI’s disruptive capacity uplifts or further marginalizes communities will depend heavily on how purposefully its development and application are guided.

As algorithms play a growing role in areas like healthcare, education, finance, and governance, thoughtful oversight is needed to prevent baked-in biases that could deny opportunities to underserved groups. Without deliberate alignment to ethical goals, AI risks exacerbating historic inequities and undermining human dignity.

Nowhere are these tensions starker than in domains like community development and impact investing, which explicitly aim to expand prospects for underserved populations. As AI penetrates these spaces, staying aligned with social justice values demands vigilance.

Here are some key ways that AI could affect the impact investing sector, both through misalignment that causes harm and alignment that fosters empowerment:

Overall, intentional collaboration with communities, transparency in AI systems, participatory design processes, and cautious integration of automation to augment rather than override human judgment will be critical to steering AI toward expanding opportunities equitably in the impact investing field.

Like any technology, if created without care, AI systems can easily absorb and amplify existing prejudices:

Without deliberate countermeasures, these dynamics will steer innovation toward concentrating power among the already privileged few, profoundly undercutting community development's goal of challenging unjust power structures.

In domains focused on economic and social justice, premature AI adoption without ethical guardrails could:

Each of these outcomes further divorces technology deployments from the needs and aspirations of the marginalized peoples they were intended to serve.

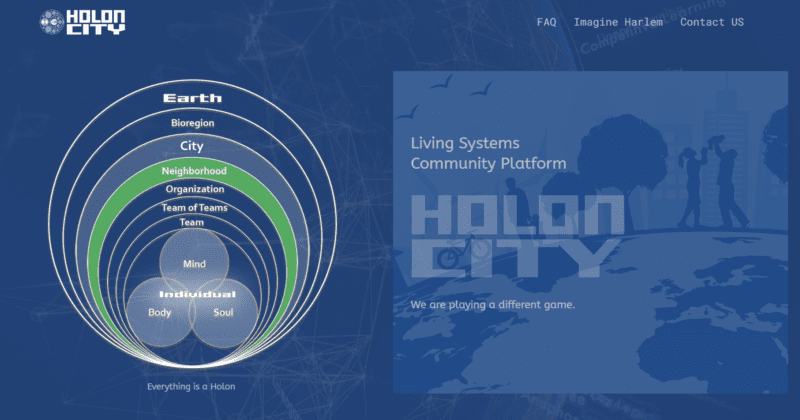

So how can we steer innovation toward empowerment rather than exploitation? Systems-based frameworks like Holon City offer a path to community alignment and empowerment through the responsible alignment of AI models. The platform facilitates the participatory design of AI systems so that they reflect community values, and residents are engaged in providing data and feedback to optimize algorithms for local contexts.

Holon City is a Living Systems-based framework for community development and AI alignment.

The goal is to link knowledge across fields, enabling innovative solutions to social, economic, and environmental challenges. The platform facilitates the shift toward localized, equitable models of production and consumption that amplify community creativity. With thoughtful implementation, AI can be a tool for communities to co-create their desired future.

The Beauty of Community campaign will engage the Upper Manhattan community in an art contest using generative AI to showcase the culture and aesthetics of the neighborhood. Participants will incorporate local styles, symbols, and perspectives into AI-generated artworks that capture the essence of the community. The campaign will help create the community-focused, real-world data needed to address misalignments already inherent in current AI systems.

Imagine Harlem will be the first implementation of the community impact engagement platform.

Imagine Harlem convenes stakeholders across sectors to guide innovation transparently toward sustainability, economic inclusion, and cultural heritage preservation. With community oversight, AI is more likely to benefit everyone.

These examples embody principles for ethical AI design:

Impact investing aims for financial returns alongside social change – improved health, economic opportunity, sustainability, and more. As data-hungry AI enters impact work, vigilance is required to prevent creeping dehumanization and extraction under the guise of progress.

Communities themselves must retain sovereignty over their narratives and capital. Local participatory bodies, not distant technocrats, should determine the ethical constraints and acceptable use cases for AI in impact programs. Impact metrics should capture holistic community well-being, not just narrow measures of efficiency.

Policy, incentives, and education for impact investors must treat community empowerment as central to “doing good.” Marginalized groups should control the datasets that contest biased narratives with human truths.

To mainstream this vision and prevent harmful AI, we must:

The accelerating pace of technological change will strain our social systems. But by connecting innovation to ethics and community self-determination, we can ensure that AI and automation uplift rather than endanger. Our task ahead is to guide science toward serving solidarity and justice, not as a futile stand against progress, but as a lens that focuses it wisely. If ethical goals align with pragmatic impact, the humanistic path can prevail.
